This morning I noticed a tweet from @JaneBozarth, the Doctor O’ Learning who writes books and is the World’s Worst Bureaucrat in Raleigh, NC. She was pimping an article she’d written for Learning Solutions Magazine on measuring the results of your e-learning, entitled “Nuts And Bolts: How To Evaluate e-Learning”.

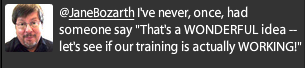

Always the snarky guy, I tweeted the link out to all my little followers, but then sent a comment direct to Jane:

I spend a lot of time promoting the idea of assessment in learning — and rarely, if ever, get much interest from clients in including that part of the project. Because it’s expensive, difficult, time-consuming and often embarrassing.

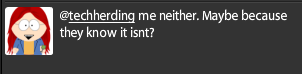

She responded quickly:

And that’s the sad truth. Lots of people don’t really want to know if their learning, “e” or otherwise, is working. Because it’s hard to measure, it takes time and dedication, and (ultimately) you might just embarrass yourself.

So Why Bother To Measure At All?

Because I don’t create amazing training the first time I try. My first version is usually somewhere between “good” and “sucks”. I don’t spend a lot of time on it, it isn’t real glossy and pretty, and sometimes there are even some big empty gaps. But I quickly evaluate how well it worked — using the actual learning goals and the assessments we all agreed on at the beginning — and then go right back to designing.

By version #2, I’m usually at about “great”. But I don’t stop there. Now, I’m able to really start making things happen. I can add teaching suggestions, more interactions, alternate models, and lots of nice media and facilitation. Then I head back to designing.

By version #3, we’re up to “amazing”. Most people would hang it up. Not me. I’m drilling down on the 20% of the assessment questions that people are still missing. I’m asking the students what isn’t engaging them, the instructor what still feels stiff, and the client what they might have forgotten to include.

At version #4, we’ve reached “in-freakin-credible”. Go ahead and measure me. Bring it on! Want to talk about R.O.I.? I’ve got your ROI right here, sucka! Want to compare me to your PPT lectures? Go for it! Want to put your next training project out to the lowest bidder? Listen to me chuckle my evil chuckle!

So the next time somebody asks you whether you include assessment, just smile and say “of course — that’s where we start!”

{ 5 comments… read them below or add one }

You call that pimping? No, pimping would be if I said, “And don’t forget Jane Bozarth is the author of the hot-selling ‘Social Media for Trainers’ available now for e-readers and in paperback worldwide.”

See the difference?

Thanks!

Best,

Jane

@Jane I actually said you were pimping your magazine article not your Award Winning, Hot Selling, Amazingly Literate and Easily Read Book “Social Media For Trainers” which I’m hot-linking here to purchase easily at Amazon for the incredibly low-low price of only $36.00.

But, in an amazing coincidence, your confusion has given you yet another chance to pimp your book. How lucky you are!

(BTW — I would suggest that my readers NOT purchase any of those tattered “used” copies available for $30.69. Aside from the fact that you wouldn’t get a commission on them, there’s the possibility of anthrax spores or missing pages. Not to mention missing out on the random crisp $50 bills you insert at the factory.)

And there’s nothing wrong with pimping books. The Catholic Church has been doing it with their bestseller for years.

Thank you both for identifying one of the most prevalent issues in training today. Most every ID was taught to define and clarify true learning (performance) objectives, then work out how to evaluate whether those objectives were achieved, and only then back into content (everyone would do well to follow Cathy Moore’s very useful advice on these points).

In practice, I see subject matter content wrangling followed by window dressing (“we need more ‘engaging’ interactions” being the rally to add pointless clicks for drag and drops, crosswords or other games that have zero application to the workplace). Assessment is almost always an afterthought tagged onto the end, generally designed around information recall. There is virtually zero planning for follow-up on transfer to or impact on the workplace. Number of folks passing the test, average scores, number of attempts, and other “burgers served” metrics are 90% of what I see across literally hundreds of training groups.

Embarrassing.

And, I agree with all your comments: most of what’s produced is garbage (Clark Quinn says it best: http://bit.ly/9kFBZm), so measuring, for most, is a losing game (which is why “burgers served” is a favorite- not hard to run the measures and easy to run up the meter to hit targets). Even if your game is tight, measurement is tough work to do even moderately well- most people turn, extend arms, and run away like they caught fire when approached with a discussion of some “real” measures.

But it is a sad state to see how far some have fallen from the basic principles they were taught, and that style has replaced substance for many. Given the economic challenges of today, the pace of change, and the amount of business that is driven by human capital vs any other asset on the balance sheet, learning professionals can, if committed, make the difference for organizations and prove their contributions. In fact, most articles are showing the C-levels are demanding it- no more free rides (which is a great thing in my opinion – if you aren’t willing to stand behind your work, that says it all).

@David Thanks — I agree that I’m seeing more interest in actual measurement of whether or not training activities have any actual business impact, and I think that’s a good thing. If you install a new HVAC system in the warehouse, nobody would just accept a smile sheet that the rooms were comfortable. So what’s wrong with having actual goals and metrics before we start designing training?

I always love the blank looks on the faces of project teams when, early on, I start asking about specifics of assessment. I’m usually told that we’ll write those questions as part of the post-mortem, based on the final project. “So we’re sure that they match the content.”

Bang. Head. Here.

Yes, “let’s do questions last to match content” is the typical cart-before-the-horse issue that has too many folks wrestling content and writing bad questions vs focusing on what matters to the business, unrelentingly focusing on what it will take to provide that to the business, and working toward it.

I’ll keep fighting though- despite the large bruise in the middle of my forehead.